Create your first table on Hive using data from CSV

What is Hadoop and Hive

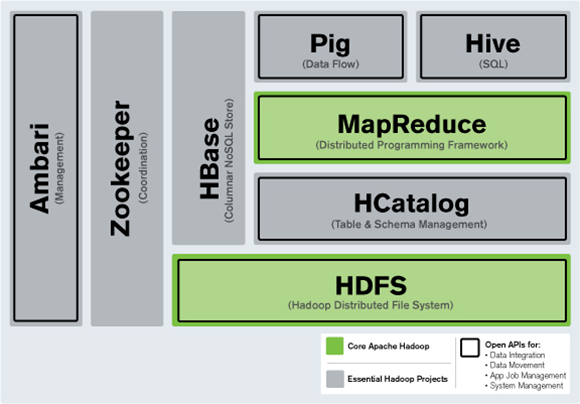

‘Apache Hadoop’ software library is a framework that allows for the distributed processing of large data sets across clusters of computers using simple programming models. It is designed to scale up from single servers to thousands of machines, each offering local computation and storage. Rather than rely on hardware to deliver high-availability, the library itself is designed to detect and handle failures at the application layer, so delivering a highly-available service on top of a cluster of computers, each of which may be prone to failures.

Whereas ‘Apache Hive’ is a data warehouse software which facilitates reading, writing, and managing large datasets residing in distributed storage using SQL. Structure can be projected onto data already in storage.

For more detailed reference — https://betterprogramming.pub/hadoop-vs-hdfs-vs-hbase-vs-hive-ddfffd45d222

Pre-requisite

Hadoop and Hive installed and configured, if not please follow the below installation guides.

Hadoop Installation

Hive Installation

Getting-Started

What we will be doing in this section is to download a CSV file from here in our local machine and transfer it to hdfs and create a hive view over it to query the data with plain SQL.

Fire up your distributed file system using below command-

start-dfs.shCreate a directory named ‘bds’, here we will be putting all the downloaded csv

hadoop fs -mkdir /user/harssing/bds/Now, we need to transfer the local downloaded CSV file to the above created directory mentioning the source and target location. Source being the local machine and target being the hadoop cluster directory.

hadoop fs -put /user/harssing/downloads/yellow_tripdata_2019-01.csv /user/harssing/bds/Hive Console

Fire up the hive console using command ‘hive’ and after it loads up we will create a temporary table and then load the CSV file into the table we just transferred.

Create table u_harssing.cabs

(VendorID int, pickup timestamp, dropoff timestamp, passenger_count int, trip_distance float, RatecodeID int, store_and_fwd_flag string, PULocationID int, DOLocationID int, payment_type int, fare_amount int, extra int, mta_tax int, tip_amount int, tolls_amount int, improvement_surcharge int , total_amount int, congestion_surcharge int)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.OpenCSVSerde' WITH SERDEPROPERTIES ( 'separatorChar'=',' ,'quoteChar' ='\"' )

STORED AS TEXTFILE

location 'hdfs:///user/harssing/bds/' TBLPROPERTIES('skip.header.line.count'='1');Run plain queries over this temporary table by executing-

select * from u_harssing.cabs limit 10;Copy data from temporary table to ORC table

Time to create a hive table which is in ORC format. The main advantage of an ORC format is to reduce the size of a table.

Create a ORC table using-

Create table u_harssing.cabs_orc

(VendorID int, pickup timestamp, dropoff timestamp, passenger_count int, trip_distance float, RatecodeID int, store_and_fwd_flag string, PULocationID int, DOLocationID int, payment_type int, fare_amount int, extra int, mta_tax int, tip_amount int, tolls_amount int, improvement_surcharge int , total_amount int, congestion_surcharge int)

STORED AS ORC;INSERT INTO u_harssing.cabs_orc SELECT * FROM u_harssing.cabs;

As we have created the ORC table and the underlying temporary table referencing the CSV files can be deleted including the CSV files.

ORC format reduces the data storage cost as it stores data in ORC i.e Optimised Row Columnar format.